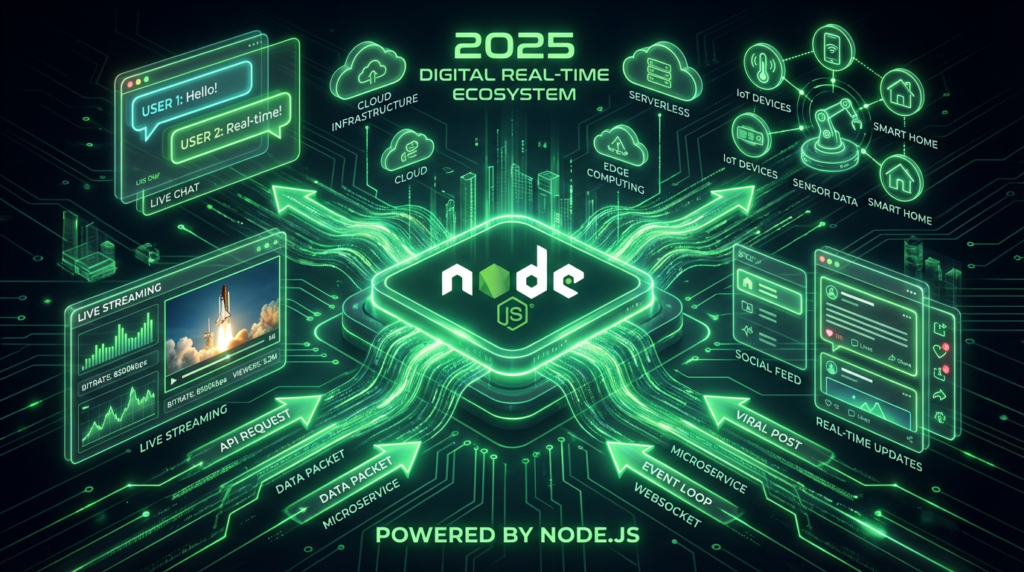

Node.js has become one of the most important tools in modern web development. It powers everything from social media apps to streaming services and cloud platforms. But for beginners, the first question is simple: What is Node.js, and how do you start using it?

In 2025, Node.js is more important than ever. Companies want fast, scalable, real-time applications, and Node.js makes that possible. This beginner-friendly guide explains Node.js in a simple, clear, and practical way so anyone can understand how it works and why it matters.

What Is Node.js?

Node.js is a runtime environment that lets you run JavaScript outside the browser. Before Node.js, JavaScript ran mainly inside web browsers. With Node.js, JavaScript can power entire backend systems, APIs, and servers.

Node.js uses the V8 engine (the same engine that powers Google Chrome). V8 compiles JavaScript code into machine code, which makes Node.js fast and efficient.

In simple words:

Node.js = JavaScript + Server-Side Power

This allows developers to use one language, JavaScript, for both frontend and backend development.
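To see the "JavaScript outside the browser" idea in practice, here is a small sketch you could save under any name (for example `env-check.js`, a hypothetical file name) and run with `node env-check.js`. It prints the Node.js and V8 versions and shows that a browser-only global such as `window` is absent, while a server-side built-in module such as `os` is available.

```javascript
// Built-in module with operating-system information.
// It exists in Node.js but not in web browsers.
const os = require("os");

// process is a Node.js global that describes the running program.
console.log("Node.js version:", process.version); // a string such as "v20.11.1"
console.log("V8 engine version:", process.versions.v8);

// Browser-only globals like window are not defined in Node.js.
console.log("typeof window:", typeof window); // "undefined"

// Server-side capabilities, such as inspecting the host machine, are available.
console.log("Platform:", os.platform());
console.log("CPU cores:", os.cpus().length);
```

The exact versions and platform printed depend on your installation and operating system.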

Why Is Node.js Important for Beginners?

Node.js is beginner-friendly and easy to learn because:

- You use JavaScript, a language many beginners already know.

- It gives you access to a huge collection of free libraries through NPM.

- It’s used everywhere, from startups to big tech companies.

- It supports real-time features like chat and live updates.

Learning Node.js gives beginners a strong foundation in backend development and helps them start building modern, scalable apps.

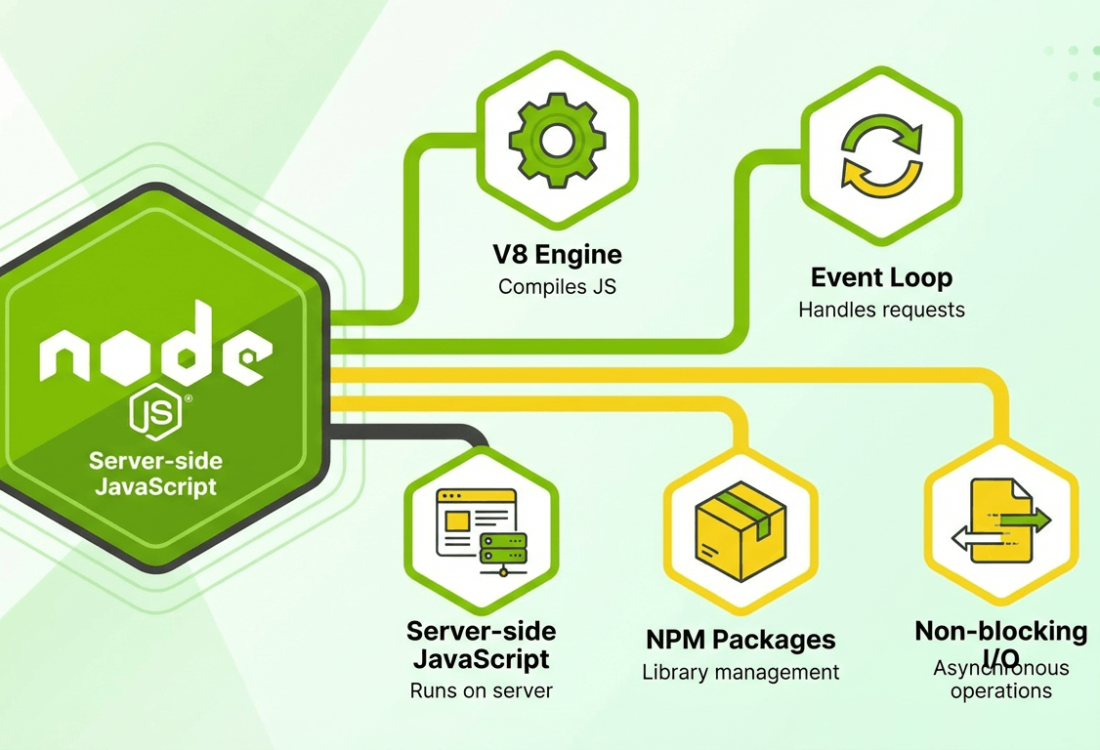

How Does Node.js Work?

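Node.js works on an event-driven model. Instead of waiting for each slow task (such as reading a file or querying a database) to finish, it starts the task, moves on to other work, and reacts when the result is ready. This lets it manage many requests at the same time without slowing down.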
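Handling many requests at once can be simulated without a real database. In this sketch, `fakeRequest` is a hypothetical stand-in for slow I/O (it simply waits on a timer). Three 100 ms "requests" started together should finish in roughly 100 ms in total rather than 300 ms, because Node.js does not sit idle waiting for each one in turn.

```javascript
// Hypothetical stand-in for slow I/O such as a database query:
// it resolves after `ms` milliseconds without blocking other work.
function fakeRequest(id, ms) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`request ${id} done`), ms);
  });
}

async function handleThreeRequests() {
  const start = Date.now();

  // All three timers start immediately; Node.js waits for them together.
  const results = await Promise.all([
    fakeRequest(1, 100),
    fakeRequest(2, 100),
    fakeRequest(3, 100),
  ]);

  const elapsed = Date.now() - start;
  return { results, elapsed };
}

handleThreeRequests().then(({ results, elapsed }) => {
  console.log(results);
  // Roughly 100 ms, not 300 ms, because the waits overlap.
  console.log(`Finished in about ${elapsed} ms`);
});
```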

Here are the main parts that make Node.js powerful:

- V8 JavaScript Engine

Runs JavaScript at high speed.

- Event Loop

Allows Node.js to handle thousands of concurrent tasks efficiently on a single main thread by running each task’s callback when its result is ready.

- Non-Blocking I/O

Input and output operations (such as file access and network requests) run without stopping other code. This is a big reason why Node.js is so fast.

- NPM (Node Package Manager)

A huge library of tools and packages you can install and use right away.

Together, these make Node.js ideal for fast applications and real-time systems.

What Can You Make with Node.js?

Node.js is flexible and works well for many types of projects. Beginners can build:

- Chat applications

- Real-time dashboards

- Social media features

- Streaming services

- RESTful APIs

- E-commerce backends

- Automation scripts

- IoT device controllers

Companies like Netflix, Uber, PayPal, and LinkedIn use Node.js because it speeds up development and improves performance.

Why Do Companies Prefer Node.js?

Businesses choose Node.js because it solves major challenges in modern development.

- High Performance

The V8 engine and non-blocking I/O make Node.js very fast.

- Scalability

It can handle large numbers of users and requests.

- Full-Stack JavaScript

Teams use one language across frontend and backend.

- Large Community

Millions of developers contribute to a vast ecosystem of open-source packages.

- Real-Time Communication

Chat, notifications, live tracking systems: Node.js handles them well.

Node.js gives companies speed, efficiency, and flexibility in one package.

Node.js for Beginners: How to Get Started

Let’s walk through the simple steps for learning and using Node.js.

Step 1: Install Node.js

Go to the official Node.js website and download the recommended version for your operating system.

Installation includes:

- Node.js runtime

- NPM (Node Package Manager)

After installation, check your version:

node -v

npm -v
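Besides checking the version by hand, a script can check it at runtime, which is handy when a project needs a minimum Node.js version. This sketch reads `process.versions.node` (a string such as "20.11.1", without the leading "v") and compares its major number against a minimum. The value 18 is an arbitrary assumption for illustration, not an official requirement.

```javascript
// Hypothetical minimum major version, chosen only for illustration.
const MIN_MAJOR = 18;

// process.versions.node is a string like "20.11.1"; take the first number.
const major = Number(process.versions.node.split(".")[0]);

// Returns true if the running Node.js major version is at least minMajor.
function isSupported(minMajor) {
  return major >= minMajor;
}

if (isSupported(MIN_MAJOR)) {
  console.log(`Node.js ${process.versions.node} is new enough.`);
} else {
  console.log(
    `Node.js ${process.versions.node} is too old; please install ${MIN_MAJOR} or later.`
  );
}
```

Save it under a name of your choice (for example `check-version.js`) and run it with `node check-version.js`.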

Step 2: Create Your First Node.js File

Open a folder and create a new file:

app.js

Add this code:

console.log("Hello from Node.js");

Run it with:

node app.js

You’ve just created your first Node.js program!

Step 3: Build a Simple Web Server

Node.js makes it easy to create servers. Here’s a basic example:

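// Load Node.js's built-in HTTP module
const http = require("http");

// Create a server that replies to every request with a short message
const server = http.createServer((req, res) => {
  res.write("Welcome to Node.js for Beginners!");
  res.end();
});

// Start listening for requests on port 3000
server.listen(3000, () => {
  console.log("Server running on port 3000");
});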

Save this code as server.js and run it:

node server.js

Open your browser and go to:

http://localhost:3000

You’ll see your message on the screen.
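The server above returns the same text for every URL. As a slightly fuller sketch, the handler below also sets an HTTP status code and a `Content-Type` header with the built-in `res.writeHead`, and answers differently depending on `req.url`. So it can be tried without a browser, the hypothetical helpers `demo` and `fetchText` start the server on a free port on 127.0.0.1, request two paths with the built-in `http.get`, and then shut the server down.

```javascript
const http = require("http");

// Route requests by URL and always set a status code and Content-Type.
function handler(req, res) {
  if (req.url === "/") {
    res.writeHead(200, { "Content-Type": "text/plain; charset=utf-8" });
    res.end("Welcome to Node.js for Beginners!");
  } else {
    res.writeHead(404, { "Content-Type": "text/plain; charset=utf-8" });
    res.end("Page not found");
  }
}

// Request a path from a local server and collect the response body.
// agent: false uses a fresh, non-keep-alive agent so the socket closes.
function fetchText(port, path) {
  return new Promise((resolve, reject) => {
    http
      .get({ host: "127.0.0.1", port, path, agent: false }, (res) => {
        let body = "";
        res.setEncoding("utf8");
        res.on("data", (chunk) => (body += chunk));
        res.on("end", () => resolve({ status: res.statusCode, body }));
      })
      .on("error", reject);
  });
}

// Start on a free port (0 lets the OS choose), make two requests, then stop.
function demo() {
  const server = http.createServer(handler);
  return new Promise((resolve, reject) => {
    server.listen(0, "127.0.0.1", async () => {
      const { port } = server.address();
      try {
        const home = await fetchText(port, "/");
        const missing = await fetchText(port, "/missing");
        resolve([home, missing]);
      } catch (err) {
        reject(err);
      } finally {
        server.close();
      }
    });
  });
}

demo().then((results) => console.log(results));
```

In a real app you would instead keep the server running with `http.createServer(handler).listen(3000)` and visit http://localhost:3000 in your browser.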

Step 4: Using NPM for Packages

NPM gives you access to millions of libraries. For example, install Express (a popular Node.js framework):

npm install express

Create a simple server with Express:

const express = require("express");

const app = express();

// Respond to GET requests on the home page
app.get("/", (req, res) => {
  res.send("Hello from Express!");
});

app.listen(3000, () => {
  console.log("Server running on port 3000");
});

Express gives you a cleaner, shorter way to define routes and responses than the built-in http module.

Step 5: Understanding Modules

Node.js code is split into modules. You can create your own:

math.js:

exports.add = (a, b) => a + b;

app.js:

const math = require("./math");
console.log(math.add(5, 3)); // prints 8

Run:

node app.js

Modules let you split your code into small, reusable files, which makes it easier to manage.

Step 6: Working with JSON & APIs

Node.js is great for APIs. Here’s a simple example, added to the Express app from Step 4:

app.get("/api/data", (req, res) => {
  // res.json sends the object as a JSON response
  res.json({ name: "Node.js Beginner", year: 2025 });
});

With the server running, visiting http://localhost:3000/api/data returns this object as JSON. Many real-world APIs are built on this same pattern.
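`res.json` comes from Express. To show roughly what it does underneath, here is a sketch of the same `/api/data` endpoint using only the built-in `http` module: the server converts the object with `JSON.stringify` and sets a `Content-Type: application/json` header, and the client turns the body back into an object with `JSON.parse`. The names `handler`, `getJson`, and `demo` are hypothetical; the server listens on a free port on 127.0.0.1 and closes after one request so the sketch can run on its own.

```javascript
const http = require("http");

// Same data as the Express example, served without Express.
function handler(req, res) {
  if (req.method === "GET" && req.url === "/api/data") {
    const body = JSON.stringify({ name: "Node.js Beginner", year: 2025 });
    res.writeHead(200, { "Content-Type": "application/json" });
    res.end(body);
  } else {
    res.writeHead(404, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ error: "Not found" }));
  }
}

// Fetch a path from a local server and parse the JSON response body.
function getJson(port, path) {
  return new Promise((resolve, reject) => {
    http
      .get({ host: "127.0.0.1", port, path, agent: false }, (res) => {
        let raw = "";
        res.setEncoding("utf8");
        res.on("data", (chunk) => (raw += chunk));
        res.on("end", () => {
          try {
            resolve({
              status: res.statusCode,
              type: res.headers["content-type"],
              data: JSON.parse(raw),
            });
          } catch (err) {
            reject(err); // body was not valid JSON
          }
        });
      })
      .on("error", reject);
  });
}

// Start on a free port, request /api/data once, then shut down.
function demo() {
  const server = http.createServer(handler);
  return new Promise((resolve, reject) => {
    server.listen(0, "127.0.0.1", () => {
      getJson(server.address().port, "/api/data")
        .then(resolve, reject)
        .finally(() => server.close());
    });
  });
}

demo().then((result) => console.log(result));
```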

Step 7: Building Real Projects

Once you understand the basics, you can build projects like:

- To-do apps

- Login systems

- Chat apps using Socket.IO

- Payment integrations

- Full-stack apps with React + Node.js

- REST APIs for mobile apps

Practice is the key to becoming confident with Node.js.

When Should Beginners Learn Node.js?

Node.js is perfect for beginners who want to start a career in:

- Full-stack development

- Backend engineering

- API development

- Cloud & DevOps

- JavaScript-based apps

- Real-time systems

Learning Node.js today prepares you for the future of web development.

Conclusion

Node.js is one of the most valuable skills for modern developers. It is fast, simple to learn, and used everywhere. With Node.js, beginners can build apps, servers, APIs, and real-time systems using just one language, JavaScript.

If you want to start your Node.js learning journey with expert guidance, APEC Training Institute offers hands-on training, real-world projects, and beginner-friendly coaching. APEC helps students and professionals learn Node.js step-by-step and build strong careers in full-stack development.

Start learning Node.js with APEC. Build skills. Build confidence. Build your future.